The Mexico City-based duo is now unleashing their retina-popping visuals, rapid-fire percussion, and musical textures on a hybrid MUTEK MX + JP audience for the second time. It’s a chance to glimpse a bright future just when that vision is most needed.

We got to speak to Mali virtually as the MUTEK session was being prepared, and to catch up after the duo dazzled us with a fantastic set at last summer’s collaborative Patchathon. As CNDSD, Mali is also known in live coding circles, working with environments that involve typing program code to spawn sonic materials, so this was also a chance to understand how that flat programming approach contrasts with physicality and spatiality.

Tell us what you’re doing for this edition of MUTEK. How does it relate to past iterations?

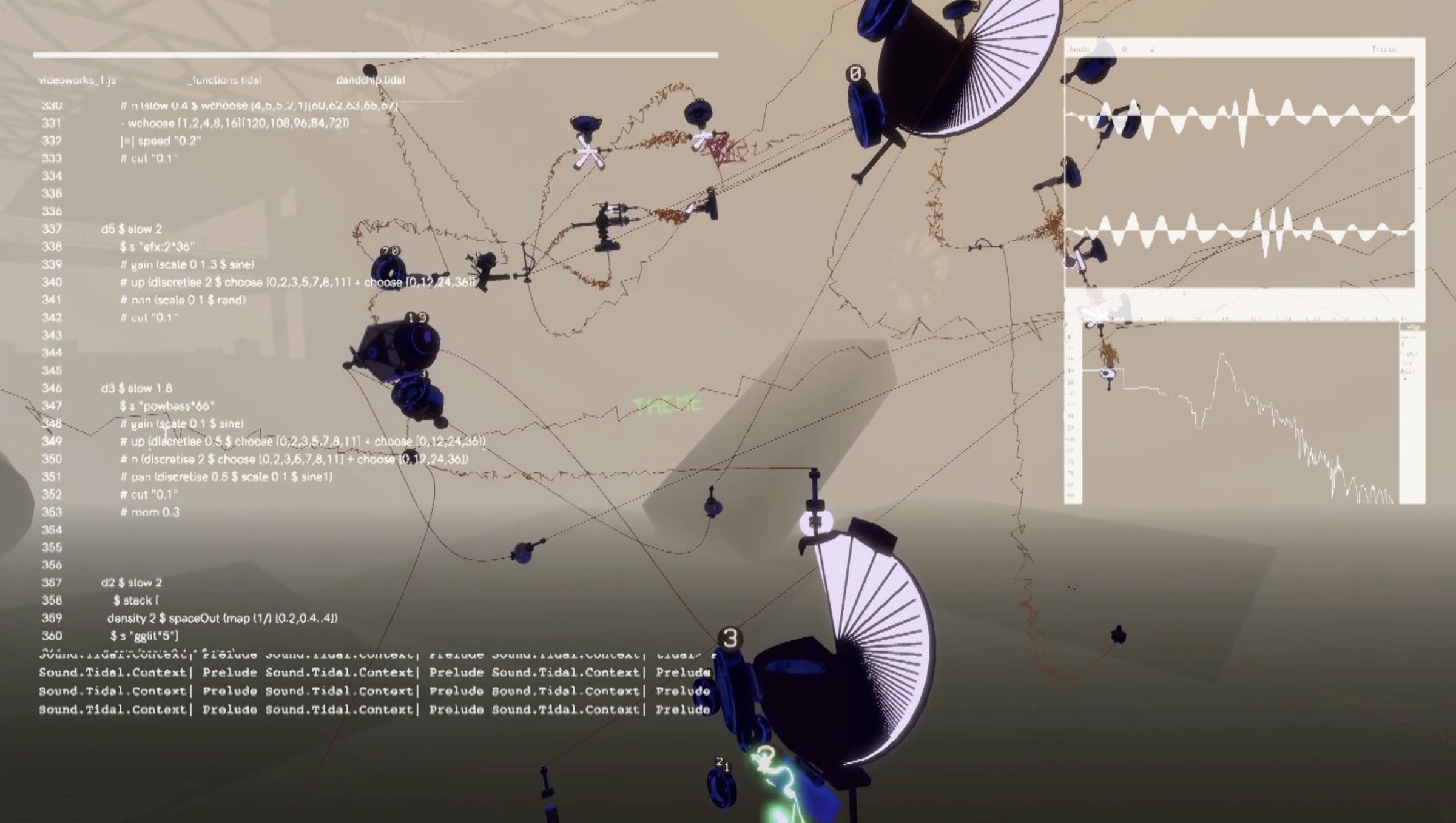

Mali: A constant in our work is making experimental music with the live coding environment TidalCycles. This time we are storytellers with Patch XR, too, with atmospheres of synths performed directly from that environment.

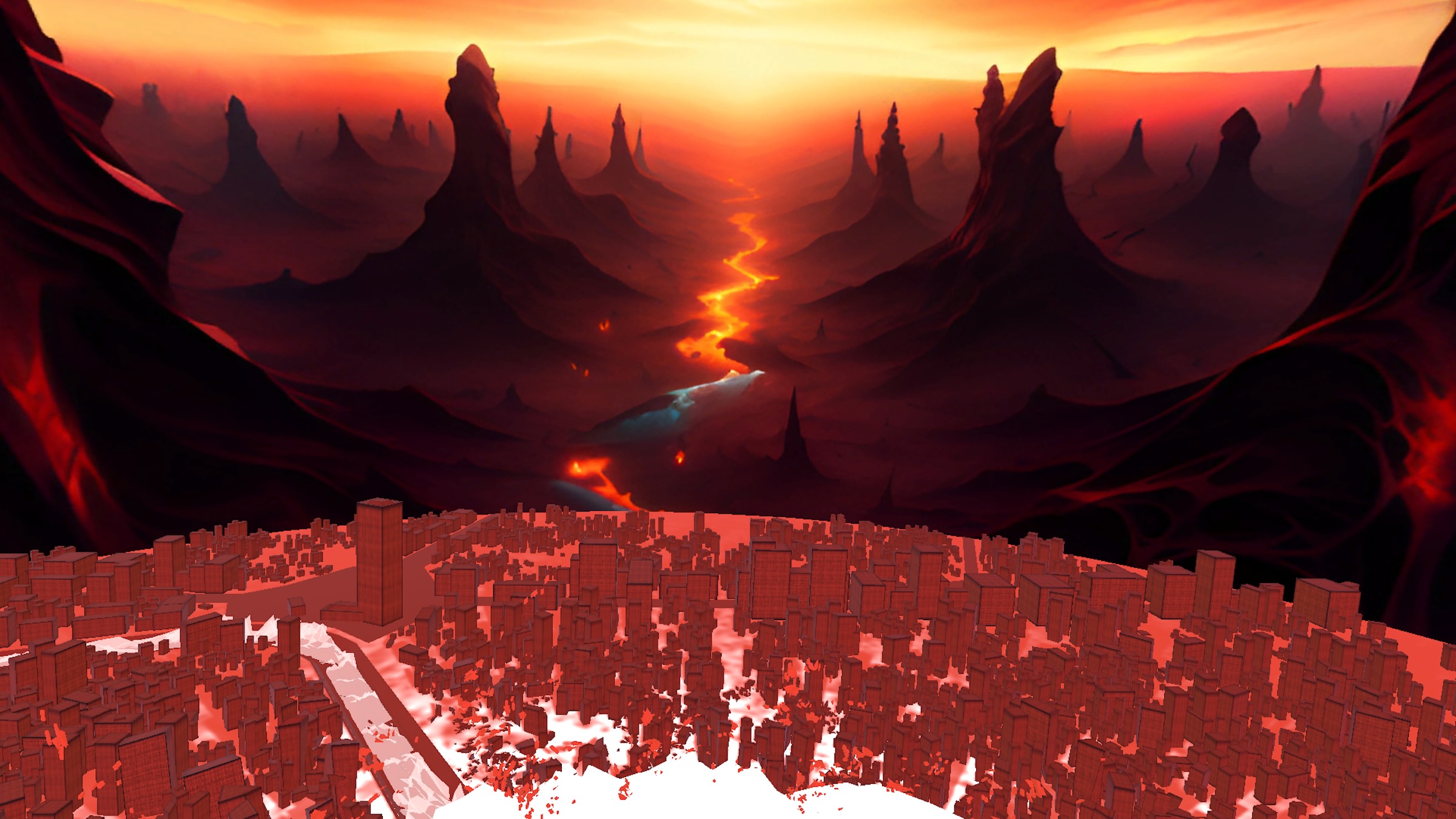

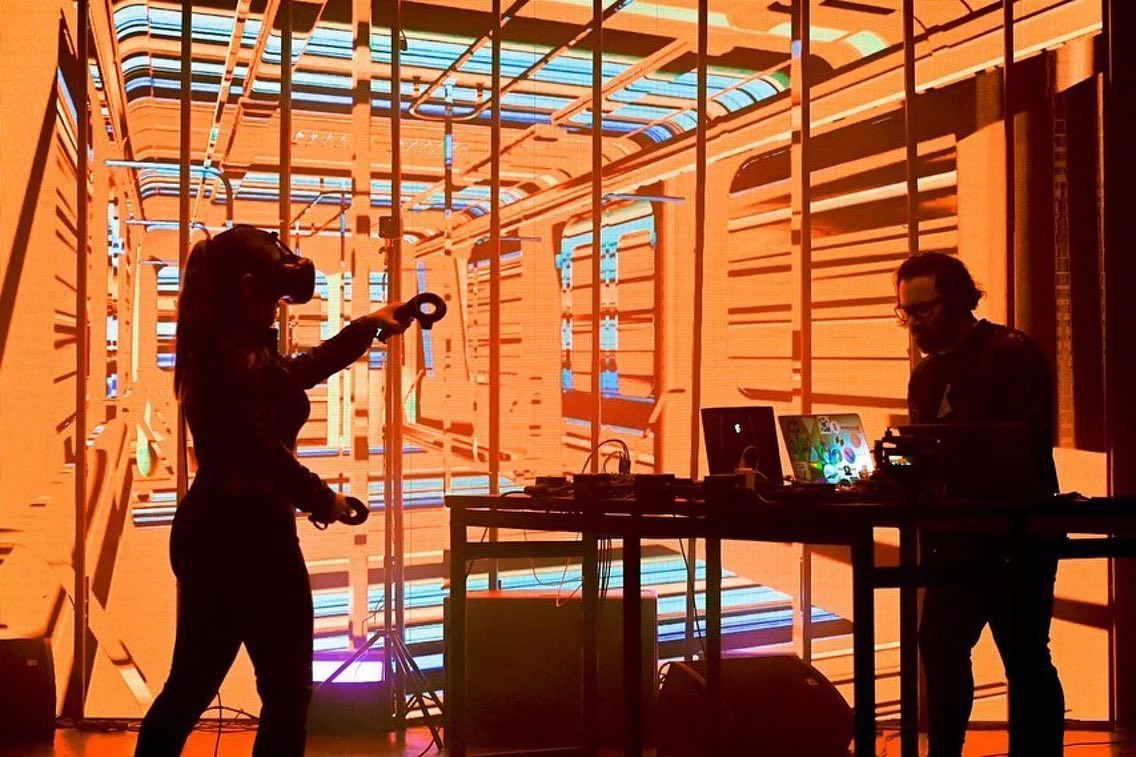

The act that will be presented in the Argentina - Barcelona MUTEK event is “Desierta,” which we first presented in the Japan-Mexico edition. We performed this act in Mexico City just a few months ago; MUTEK production oversaw the recording. It was quite a challenge. We departed from our usual format of two live coders at a table projecting visuals to work inside Patch XR instead.

How are you using Patch XR these days? How is it fitting into your way of working?

Right now, for a live act called “Tame Entities,” we are working on a new scenario – it’s a new piece that involves voice-over, processed singing, and a narrative closer to a video game. We’re modeling this environment and creating an avatar that will be the performer. One of the things I love most about being in Patch is making an instrument to play later, revisiting my old habits from when I used Pure Data [patching environment for multimedia]. That means applying the concepts of synthesis, adding delays and feedback, and trying to make an arrangement that’s harmonic, but all of this in a world parallel to the one I have on my computer screen.

I really enjoy this process! Ivan, for his part, is learning to use the tool – we are collaborators in all the pieces we create – and I think it is becoming mad! It has simply been the ideal tool to expand what we already did, compose handmade instruments, and imagine narratives.

Your set was amazing in the Patchathon! What did you get out of that experience? Has it informed any of these other versions of the performance? Or have you changed at all how you work since then?

Thank you! Tame Entities is precisely the evolution of that first experiment (cyberlive coder lol). I have been learning a lot from the Patch community: from Melodie, who’s always available to provide support, and from Eduardo, whom I always thank for sharing his tool. I think we share the dream of using other worlds and other materialities for performance.

This is controversial, too. Whenever I talk about the tool or the concert that is going to be presented at MUTEK 2021, including in some other interviews, many people find the VR element to be – and I quote – “unnecessary,” “too much,” “inaccessible for most,” “accessory” …

I think that this taboo weighs like a ghost on virtual reality, even in the year 2021. In people’s imagination, it is still an excess that lives in the world of science fiction. I think that when you have the experience, those taboos disappear and instead the mind expands.

To ask an obvious question, what’s it like to put on that headset and take on these three-dimensional controllers? How is that when you’re in front of an audience … how is it when you’re fully virtual?

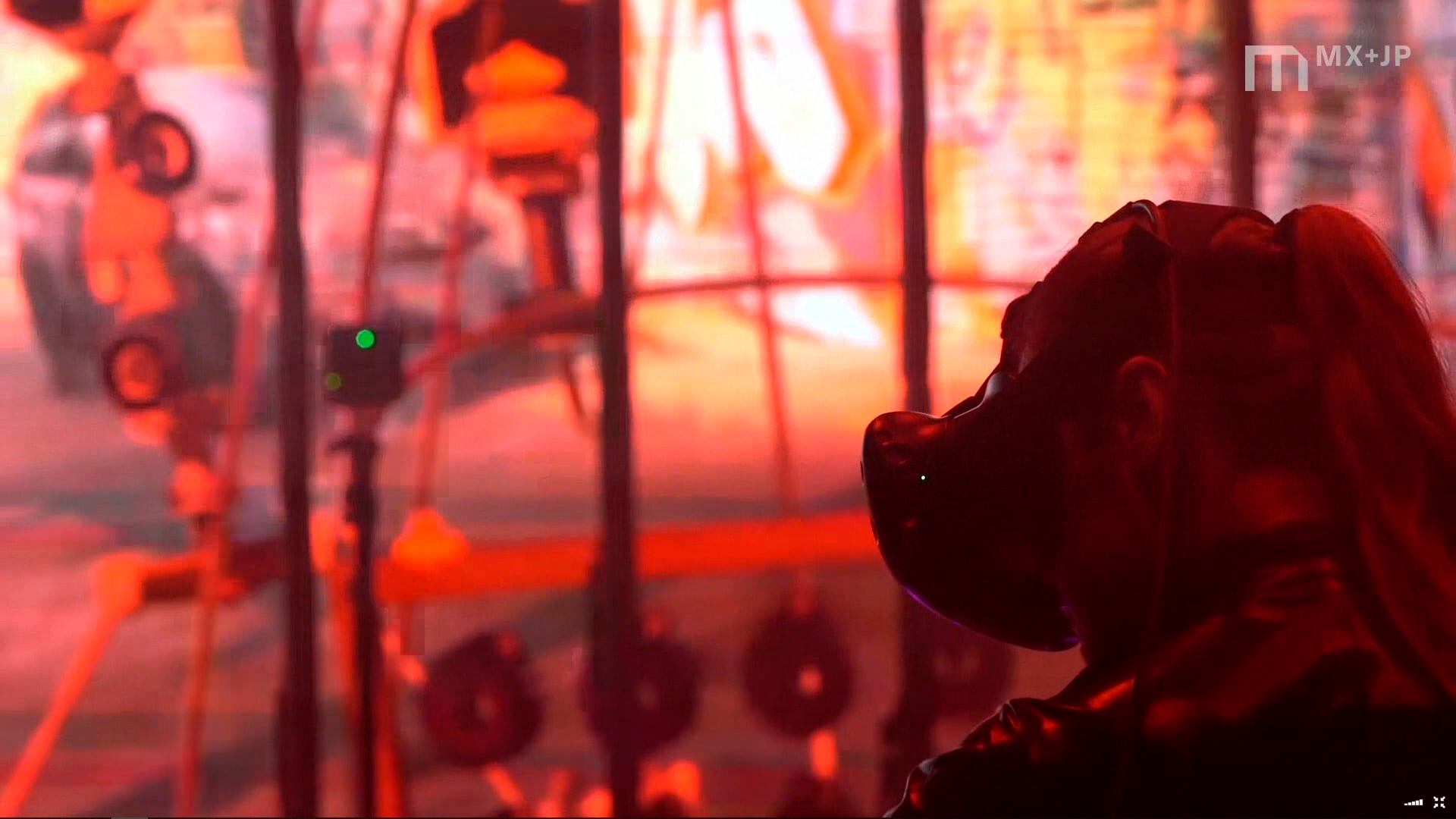

I only half lived this experience when we recorded “Desierta”; there was only a small audience. In other editions of MUTEK, I faced the extreme nervousness caused by a very large audience that’s waiting for you to run your first code and hear something that blows their minds.

We were afraid something would go wrong. The tech stack was more complex than at other times – three computers, one for Patch XR only, one for external images, one for sound, all of them communicating through a router via OSC, and of course, an HTC VIVE.

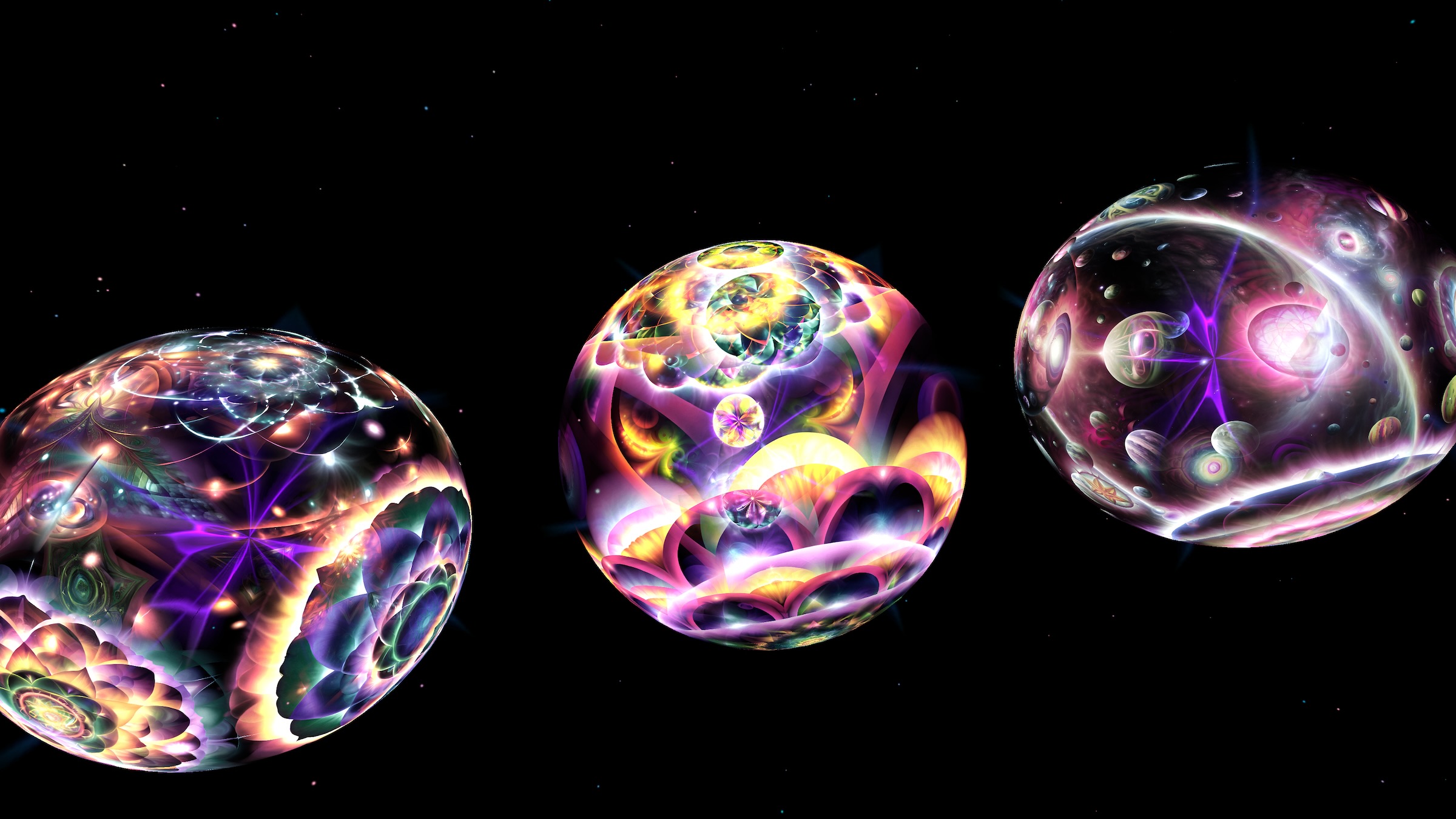

I must admit that once I started playing within the environment and listening to the first bits of code that Ivan was firing, I just started to enjoy it a lot. By the end, I was playing in a parallel world, without the people. When I finished, it was like coming back to reality, coming back from a dream.

Often when I see you, you’re transforming your artist identity into a virtual avatar / modified self, it seems. I feel like maybe that can be really liberating - our posthuman selves. Is that something meaningful with Patch?

You are definitely right. As an amateur gamer, with every customizable character that I have, sometimes I try to make them look like me in some way – hair, clothes, freckles, something – and sometimes I really want to be someone else. I love being a muscular man, covered in tattoos, in some games.

With Patch, you can be a virtual entity. You can be a mushroom, for example. You are always an interpreter - that doesn’t change - but being able to change who you are within Patch allows you to imagine stories and characters.

The expansion of the digital self is very interesting. I think of environments like Mozilla Hubs or Second Life and I see how some people really spend time on their virtual self before going to a concert.

So you obviously work with live coding stuff. Maybe you can talk about how that integrates (or contrasts) with Patch? What’s it mean for you to have this modular environment to work with even in VR?

Mali: Discovering Patch XR opened a new horizon for the performative research that Ivan and I have been doing for years, reflecting on laptop performance, showing the code, pouring “all” the emotion into those lines and not into the physical incarnation. You can dance, yes, but doing live coding definitely requires skill and a lot of concentration. You are thinking up or modifying an algorithm at that moment.

How objectionable can it be if you compare it to playing an instrument or if you play with a lot of contact microphones and your body? Since the way of making music and images was already too connected to the code, it has been a challenge to face the corporeality that Patch XR brings, as opposed to writing to make sound.

They are two contrasting positions. So we like the game of having one foot in physical reality and the other foot in virtual reality. Live coding is very noble; you can control anything! And that excites us – what if the coder modifies my reality and the colors of my environment to the rhythm of a beat while I make a drone within the environment? That is what we are working on now.

Ivan: For me, it was like making an ensemble where the avatar (Mali) improvised with me, while I manipulated the video, providing synesthesia with the music. Now, in the new interactions, the coder’s influence on the virtual world is greater. I can modify some parts of its reality from the outside. Personally, I wish that Mali could also execute her code from some objects within the XR patch in a physical way; we will see if it is possible.

For so many people in the pandemic, live performance has been reduced to really primitive stuff like performing over Zoom. So I wonder how it has impacted you working in VR when doing these remote and online performances?

Mali: In a way, the streaming format has allowed us to hybridize what happens outside the virtual reality environment – showing the screen of the live coder, what I see with my eyes, the interactions I have with the VR environment. With a big LED screen, we were able to offer more points of view on the performance in parallel, including the modifications exerted by the code in the environment.

The small audience for the recording – staff, artist friends who were also going to play, and some guests – gave us interesting feedback. There was sound and visual stimulation, but they were also fascinated by the body and the hybrid interpretation, being able to see our code on the big screen alongside what I was doing.

I can’t wait to be able to do this with a bigger audience. We imagine so many formats where artists and viewers have a magnificent experience.

I must be very honest; the artist’s experience within the virtual world is simply incredible. It’s like uniting your video game experience and playing electronic music at the same time. For us musicians, that’s a damn drug. What I always think about is how the public can share that immersive experience.

We’ll discover on the fly what happens now in the residency; we’re honored to develop a visual and sound immersion in the magnificent dome and laboratory of ZKM. We wonder things like - where is the screen? Where do we place viewers? How do we spatialize the experience? Or, in a more radical way, whether we record everything inside the lenses so that the user can navigate with their own glasses in that world and discover that they’re about to witness a concert full of underwater architecture and prehistoric whales. It’s exciting; we have many conjectures still unanswered.
